Retrofitting Word Vectors to Semantic Lexicons

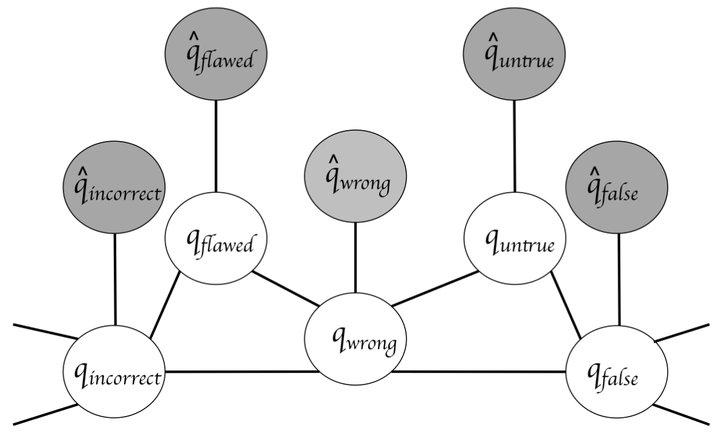

Observed and inferred word vector representations (Faruqui et al.)

This is a vectorized, iterative implementation of the paper “Retrofitting Word Vectors to Semantic Lexicons”, which refines vector space representations using relational information from semantic lexicons. Word vectors are usually learned from distributional information about words in large corpora, but they do not capture the valuable information contained in semantic lexicons such as WordNet, FrameNet, and the Paraphrase Database. Retrofitting encourages words that are linked in a lexicon to have similar vector representations, and it makes no assumptions about how the input vectors were constructed.
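
To make the idea concrete, here is a minimal NumPy sketch of a vectorized retrofitting update. It is an illustration under stated assumptions, not necessarily how this repository implements it: the function name `retrofit`, its parameters, and the dense adjacency matrix are hypothetical, and all rows are updated simultaneously (Jacobi-style) rather than one word at a time. The weights follow the paper's experimental choice of `alpha_i = 1` and `beta_ij = 1 / degree(i)`, with 10 iterations.

```python
import numpy as np


def retrofit(q_hat, adjacency, n_iters=10, alpha=1.0):
    """Sketch of retrofitting with simultaneous (Jacobi-style) row updates.

    q_hat:     (n, d) array of the original word vectors.
    adjacency: (n, n) symmetric 0/1 array; adjacency[i, j] == 1 means
               words i and j are linked in the lexicon.
    """
    q_hat = np.asarray(q_hat, dtype=float)
    adjacency = np.asarray(adjacency, dtype=float)

    degree = adjacency.sum(axis=1, keepdims=True)  # shape (n, 1)
    has_neighbors = degree[:, 0] > 0

    # beta_ij = 1 / degree(i): row-normalise the adjacency matrix,
    # leaving all-zero rows for words without any lexicon neighbours.
    beta = np.divide(adjacency, degree,
                     out=np.zeros_like(adjacency), where=degree > 0)

    q = q_hat.copy()
    for _ in range(n_iters):
        # Each linked word moves to the weighted average of its
        # neighbours' current vectors and its own original vector.
        # Rows of beta sum to 1, so the normaliser is 1 + alpha.
        updated = (beta @ q + alpha * q_hat) / (1.0 + alpha)
        # Words with no neighbours keep their original vectors.
        q = np.where(has_neighbors[:, None], updated, q_hat)
    return q


# Toy example: words 0 and 1 are linked, word 2 is isolated.
q_hat = np.array([[1.0, 0.0],
                  [0.0, 1.0],
                  [1.0, 1.0]])
adjacency = np.array([[0, 1, 0],
                      [1, 0, 0],
                      [0, 0, 0]])
q = retrofit(q_hat, adjacency)
```

In this toy example the two linked words are pulled toward each other while staying close to their original vectors, and the isolated word is left unchanged. A dense adjacency matrix is used only for brevity; a real vocabulary would likely need a sparse representation.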